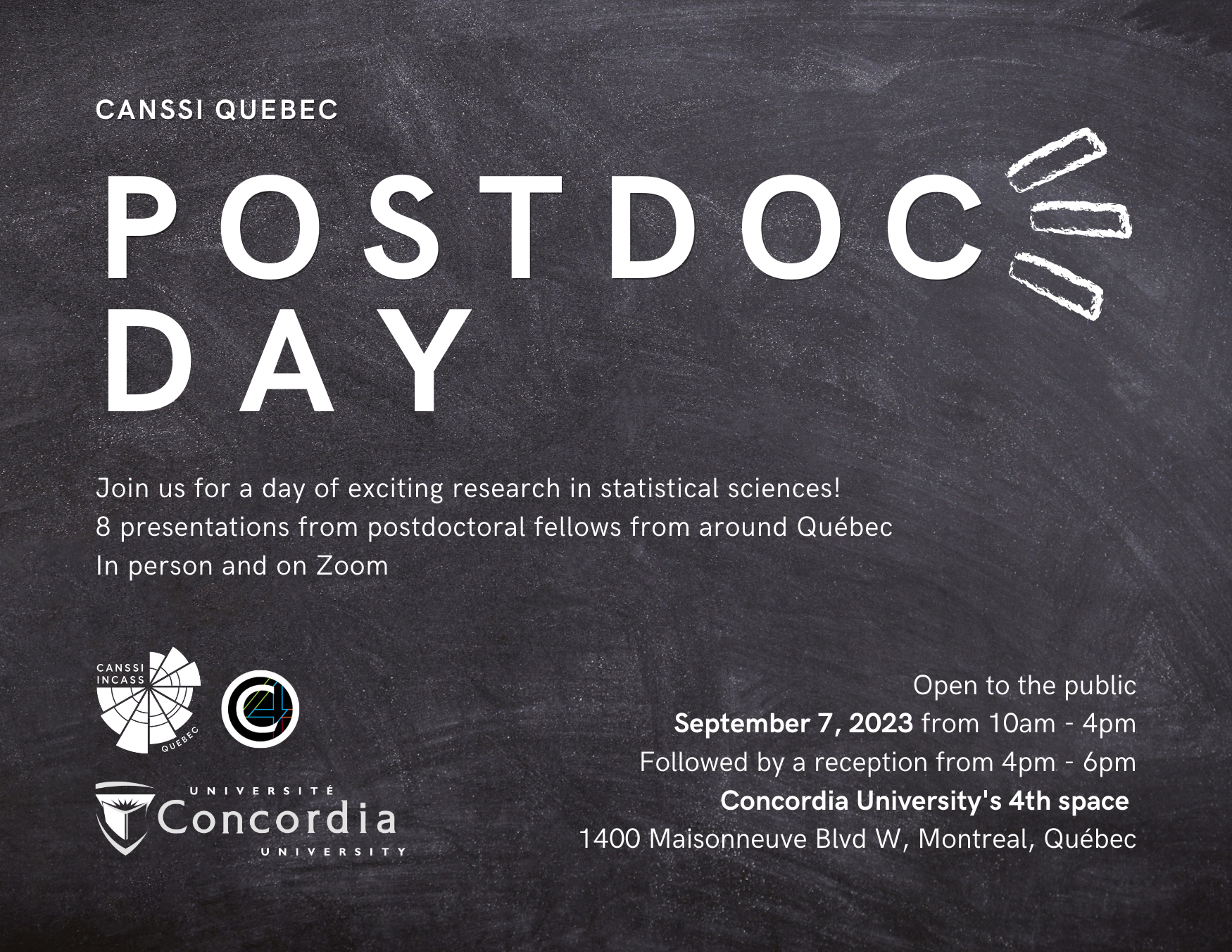

CANSSI (Canadian Statistical Sciences Institute) Quebec hosted its inaugural Postdoc Day on September 7th, 2023. The event took place at Concordia University's 4th Space and featured eight postdoctoral presenters from across Quebec, who showcased their research in the statistical sciences.

The vision for this event came from CANSSI Quebec's Interim Regional Director, Mélina Mailhot, and project coordinator, Christopher Plenzich. Their efforts drew more than 50 attendees, both in person and online. The event was a great success and has set the stage for exciting future events by CANSSI Quebec.

CANSSI Quebec thanks Concordia University and the 4th Space team for their collaboration.

If you missed out, don't worry! You can catch all the presentations on YouTube.